BCSC 206: 2019-2020 Projects

Project Description

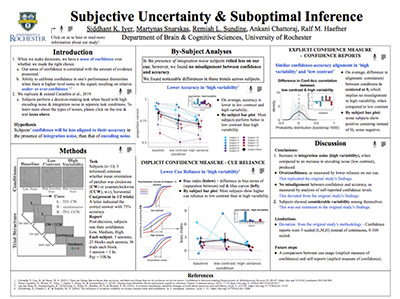

Background: Humans (and animals) constantly have to make decisions based on information obtained from the outside world, whether through their eyes, ears, or other senses. In many cases, optimal decision-making requires computing not only information about the target itself (e.g., the identity of a person or object) but also one's uncertainty about being correct. This is important, for instance, to avoid catastrophic outcomes ("am I sure I didn't see a car coming from the left?" before deciding to cross a street), but also to combine information from one source with information from other sources. While existing studies often find that humans are close to optimal in low-level perceptual tasks, they are sometimes far from optimal in higher-level cognitive tasks, a discrepancy that is not well understood.

PI: Haefner

Paper: Herce Castañón, S., Moran, R., Ding, J., Egner, T., Bang, D., & Summerfield, C. (2019). Human noise blindness drives suboptimal cognitive inference. Nature Communications, 10(1), 1–11. https://doi.org/10.1038/s41467-019-09330-7

Summary: Subjects are asked to judge the average orientation of a set of patterns on the screen and to indicate their confidence in their choice. Depending on how task difficulty is varied (either by adding low-level noise or by adding high-level variability), subjects shift from close-to-optimal to suboptimal decision-making, and from appropriately confident to overconfident.
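Averaging orientations needs some care, because orientation is axial: 0° and 180° describe the same grating, so a plain arithmetic mean of 10° and 170° gives 90° even though both patterns lie close to 0°. A standard fix is the doubled-angle circular mean, sketched below in Python. This is an illustration of the general technique, not the analysis code of Herce Castañón et al. (2019); it assumes orientations are given in degrees on a 0–180° scale, and the function name `mean_orientation` is hypothetical. The second return value, the mean resultant length, is one common index of how variable the set is (1 = all identical).

```python
import math


def mean_orientation(orientations_deg):
    """Doubled-angle circular mean of axial data (orientations in degrees, period 180).

    Returns (mean orientation in [0, 180), mean resultant length in [0, 1]).
    """
    if not orientations_deg:
        raise ValueError("need at least one orientation")
    # Double each angle so the 180-degree period maps onto a full 360-degree circle.
    x = sum(math.cos(math.radians(2 * o)) for o in orientations_deg)
    y = sum(math.sin(math.radians(2 * o)) for o in orientations_deg)
    # Direction of the summed unit vectors, halved back into orientation space.
    mean = (math.degrees(math.atan2(y, x)) / 2) % 180
    # Length of the average vector: near 1 when orientations agree, near 0 when spread out.
    resultant = math.hypot(x, y) / len(orientations_deg)
    return mean, resultant


print(mean_orientation([30, 40, 50]))
```

For `[30, 40, 50]` the mean is 40° (up to floating-point rounding), while for `[10, 170]` it is 0° rather than the misleading arithmetic 90°.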

Impact: This is a very recent (2019) study that has not yet been replicated. It raises a number of questions about how and why the brain processes these two kinds of uncertainty differently, as well as methodological questions about how best to measure confidence. Regardless of the outcome of the replication project in the first semester, several extensions are possible in the second semester.
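As one simple example of what "measuring confidence" can mean, the sketch below computes a calibration (over/underconfidence) score: mean reported confidence minus accuracy, where a positive value indicates overconfidence. It assumes confidence is reported on a probability-correct scale (e.g., 0.5 to 1.0) and the function name `calibration` is hypothetical; it is not the measure used in the original paper, and other measures of metacognition (such as meta-d′) exist and may be more appropriate.

```python
def calibration(confidence, correct):
    """Return (mean confidence, accuracy, mean confidence - accuracy).

    confidence: per-trial confidence as a probability of being correct (assumed scale).
    correct: per-trial booleans, True if the choice was correct.
    A positive third value means subjects were, on average, overconfident.
    """
    if len(confidence) != len(correct) or not confidence:
        raise ValueError("need equal-length, non-empty confidence and correctness lists")
    mean_conf = sum(confidence) / len(confidence)
    accuracy = sum(1 for c in correct if c) / len(correct)
    return mean_conf, accuracy, mean_conf - accuracy


print(calibration([0.9, 0.9, 0.8, 0.8], [True, False, True, False]))
```

Here the hypothetical subject reports about 85% confidence but is correct on only half of the trials, giving an overconfidence score of about 0.35.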

Skills Required

Simple stimulus/response programming in Matlab, regression analysis

UR Expo Poster (PDF)

Project Description

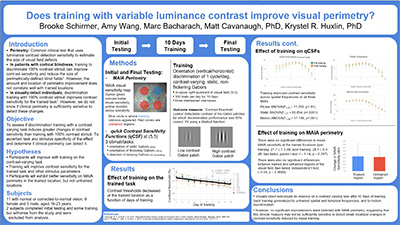

Background: For a long time, it was thought that stroke-induced damage to the primary visual cortex in humans caused an immediate, all-encompassing and permanent loss of conscious vision in the contralateral hemifield. This condition affects nearly 1% of the general population over age 50. Unlike motor stroke patients, visual stroke patients are offered no therapies and no hope of recovering any of the lost vision beyond a short, early period post-stroke, when they might experience some spontaneous improvement. Over the last 10 years or so, our lab has begun to dismantle many of the assumptions in the field of cortically induced blindness. First, we showed that it is possible to recover lost vision beyond the period of spontaneous recovery using perceptual training paradigms. Second, we showed that vision loss is not stable and permanent beyond this period; rather, vision continues to degrade if no training is administered. Recently, we began to study the early post-stroke period and uncovered a way of distinguishing spontaneous from training-induced improvements in vision and measuring each. However, we now need to verify these results by applying our new analytical methods to a larger data sample.

PI: Huxlin

Paper: Cavanaugh, M. R., & Huxlin, K. R. (2017). Visual discrimination training improves Humphrey perimetry in chronic cortically-induced blindness. Neurology, 88(19), 1856–1864.

Also useful to read: Das, A., Tadin, D., & Huxlin, K. R. (2014). Beyond blindsight: Properties of visual re-learning in cortically blind fields. Journal of Neuroscience, 34(35), 11652–11664.

Fall Semester Study: Replicate the visual field analysis of Cavanaugh & Huxlin (2017) and its finding that chronic visual stroke patients who underwent visual training showed larger improvements in visual detection performance than those who did not train. Shadow lab members and assist with patients when they undergo testing and training in the lab, to better understand the differences between clinical visual field tests and psychophysical tests of visual performance.

Summary: Students will learn to write a Matlab program that creates a unitary, interpolated "field" of visual luminance detection performance across the two eyes. They will build this unitary field from clinical data measured monocularly over the central ~24° of the visual field, before and after visual training, in a first group of stroke patients. Students will compare these data with those from a second group of patients who did not undergo training but had repeat visual tests about 6 months apart. Students will learn how visual performance is measured with clinical instruments, how this compares with psychophysical measures in the lab, and how visual training is performed to achieve visual recovery in such patients.
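The combination-and-interpolation step can be sketched as follows. The project itself uses Matlab, and the lab's exact procedure is not described here, so this Python version rests on stated assumptions: both monocular tests sample the same visual-field locations (in degrees), sensitivities are in dB, the two eyes are merged with a "better-eye" rule (the higher sensitivity at each location wins), and the sparse test points are spread onto a regular grid with inverse-distance weighting. The function names and the IDW choice are hypothetical stand-ins, not the published method.

```python
import numpy as np


def combine_eyes(left_db, right_db):
    """Hypothetical 'better-eye' rule: keep the higher sensitivity (dB) of the two eyes per location."""
    return np.maximum(np.asarray(left_db, dtype=float), np.asarray(right_db, dtype=float))


def idw_interpolate(points, values, grid_x, grid_y, power=2.0):
    """Inverse-distance-weighted interpolation of sparse test locations onto a regular grid.

    points: (n, 2) test locations (x, y) in degrees of visual angle.
    values: (n,) sensitivities in dB at those locations.
    grid_x, grid_y: 1-D arrays of grid coordinates in degrees.
    Returns an array of shape (len(grid_y), len(grid_x)).
    """
    points = np.asarray(points, dtype=float)
    values = np.asarray(values, dtype=float)
    gx, gy = np.meshgrid(np.asarray(grid_x, float), np.asarray(grid_y, float))
    # Distance from every grid node to every test location: shape (ny, nx, n).
    d = np.hypot(gx[..., None] - points[:, 0], gy[..., None] - points[:, 1])
    # Clip distances so a node sitting exactly on a test point takes (essentially) that point's value.
    w = 1.0 / np.maximum(d, 1e-9) ** power
    return (w * values).sum(axis=-1) / w.sum(axis=-1)


locs = [(-3, 3), (3, 3)]
field = combine_eyes([0.0, 30.0], [0.0, 12.0])
print(idw_interpolate(locs, field, [-3, 0, 3], [3]))
```

A 1° grid such as `np.arange(-24, 25)` in both directions would cover roughly the central ~24° tested clinically; linear or spline interpolation could replace IDW if that better matches the lab's analysis.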

What next: In the second semester of this class, students can choose from a range of follow-up projects that use the skills and analytical approaches they learned in the first semester. Potential projects revolve around measuring and modeling the magnitude of change in visual performance that occurs spontaneously after stroke. This will require analyzing visual field measurements repeated in the same subjects over time, with the goal of quantifying the changes (the only published data are qualitative). Questions will include: What are the characteristics of the early spontaneous recovery described in these patients (over what area of the visual field, how far from the border of the intact visual field, how uniform across space, etc.)? Once patients reach 6 months post-stroke, does vision really degrade in the absence of intervention? What are the characteristics of that degradation (rate, spatial extent, etc.)? How does training early versus late after stroke compare in terms of the visual field improvements attained?
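Two simple quantities could turn qualitative descriptions of change into numbers: a rate of change at each visual-field location across repeated visits, and the spatial extent of the deficit at each visit. The Python sketch below computes an ordinary least-squares slope (dB per month) per location and the area of grid cells below a cutoff. The 10 dB cutoff, the 1 deg² cell size, and the function names are hypothetical placeholders chosen for illustration, not values taken from the lab's work.

```python
import numpy as np


def sensitivity_slopes(months, fields_db):
    """Least-squares slope (dB per month) at each location across repeated visits.

    months: (k,) visit times, e.g. months post-stroke.
    fields_db: (k, n) sensitivities, one row per visit, one column per location.
    """
    t = np.asarray(months, dtype=float)
    f = np.asarray(fields_db, dtype=float)
    t_c = t - t.mean()
    denom = (t_c ** 2).sum()
    if denom == 0:
        raise ValueError("need at least two distinct visit times")
    # Covariance of time with sensitivity divided by variance of time, column by column.
    return (t_c[:, None] * (f - f.mean(axis=0))).sum(axis=0) / denom


def deficit_area(field_db, threshold_db=10.0, cell_area_deg2=1.0):
    """Area (deg^2) of grid cells below a hypothetical deficit cutoff."""
    return np.count_nonzero(np.asarray(field_db) < threshold_db) * cell_area_deg2


print(sensitivity_slopes([0, 6, 12], [[10, 20], [13, 20], [16, 20]]))
print(deficit_area([5, 12, 8, 30]))
```

In this toy example the first location improves by 0.5 dB per month while the second is stable; comparing slopes and deficit areas between trained and untrained patients is one way to frame the questions above.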

Skills Required

Programming skills, basic statistics, data analysis, and experience working with human subjects.

UR Expo Poster (MP4)

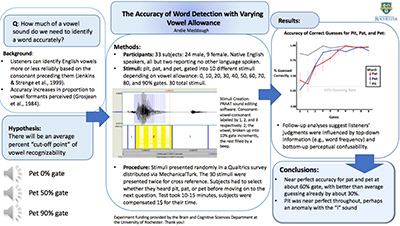

Project Description

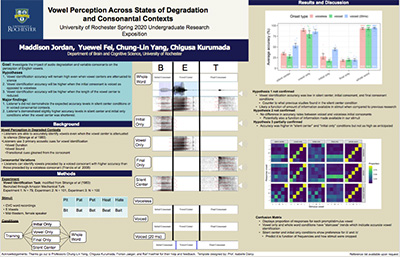

Background: Understanding natural speech is an amazing feat. Under strong pressure to produce complex articulatory gestures rapidly (4–6 syllables per second), speakers often produce words with varying features (e.g., coarticulation with neighboring sounds, omission of acoustic features) that are not observed in carefully articulated speech. To understand how human listeners identify speech sounds from such a continuous, fleeting signal, the current project aims to replicate a classic study by Strange et al. (1983). This study demonstrated that native listeners can correctly identify ten English vowels by detecting subtle formant transitions and their timing, even when the formant information normally considered to define vowels was removed. This finding motivated a large body of work uncovering multiple sources of information that listeners leverage to achieve robust speech perception.
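The core stimulus manipulation, removing the center of a vowel while keeping its onset and offset transitions (the "silent-center" syllables studied by Rogers and Lopez, 2008), can be sketched on a raw waveform. The project uses Praat for this; the Python version below only illustrates the idea on a NumPy array. It assumes the vowel boundaries are already known as sample indices, and the preserved fractions (`keep_initial`, `keep_final`) are hypothetical defaults, not the proportions used by Strange et al. (1983).

```python
import numpy as np


def silent_center(signal, vowel_start, vowel_end, keep_initial=0.15, keep_final=0.30):
    """Return a copy of `signal` with the middle of the vowel replaced by silence.

    vowel_start, vowel_end: sample indices bounding the vowel (end exclusive).
    keep_initial, keep_final: hypothetical fractions of the vowel duration kept
    at its onset and offset, where the formant transitions live.
    """
    out = np.array(signal, dtype=float, copy=True)
    dur = vowel_end - vowel_start
    cut_start = vowel_start + int(round(keep_initial * dur))
    cut_end = vowel_end - int(round(keep_final * dur))
    if cut_start < cut_end:
        out[cut_start:cut_end] = 0.0  # silence the vowel center
    return out


stimulus = silent_center(np.ones(100), vowel_start=20, vowel_end=80)
print(int((stimulus == 0).sum()))
```

With a 60-sample vowel, the first 9 and last 18 vowel samples survive and 33 samples are silenced. In practice, short amplitude ramps at the cut points help avoid audible clicks.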

PI: Yang and Kurumada

Paper: Strange, W., Jenkins, J. J., & Johnson, T. L. (1983). Dynamic specification of coarticulated vowels. The Journal of the Acoustical Society of America, 74(3), 695–705. https://doi.org/10.1121/1.389855

Also useful to read: Rogers, C. L., & Lopez, A. S. (2008). Perception of silent-center syllables by native and non-native English speakers. The Journal of the Acoustical Society of America, 124(2), 1278–1293. https://doi.org/10.1121/1.2939127

Study: During the first semester, students are trained to manipulate speech sounds acoustically using Praat and to create experimental stimuli. Students will then conduct a series of human perception experiments on the online platform Amazon Mechanical Turk, which allows researchers to run large-scale experiments with diverse populations. Participants listen to speech sounds and answer perception questions (e.g., Did you hear /beeb/ or /bib/?). In the current replication study, students will collect information about participants' language background, with special attention to native vs. non-native proficiency in English. Rogers and Lopez (2008) found intriguing differences between native and non-native listeners in the accuracy of their vowel perception: even listeners exposed to English from early childhood lagged behind native listeners. Understanding precisely how native and non-native listeners differ will provide insight into the roles of experience and age of acquisition in language learning.
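Once responses are collected, a first analysis step is scoring accuracy separately for native and non-native listeners. The sketch below assumes a hypothetical trial format of `(group, target_word, response_word)` tuples; the group labels and function name are illustrative only. The project lists R as its statistics software; this Python version just shows the scoring logic that any subsequent statistical model would build on.

```python
from collections import defaultdict


def accuracy_by_group(trials):
    """Proportion correct per listener group.

    trials: iterable of (group, target_word, response_word) tuples (hypothetical format).
    Returns a dict mapping each group to its proportion of correct responses.
    """
    hits = defaultdict(int)
    totals = defaultdict(int)
    for group, target, response in trials:
        totals[group] += 1
        hits[group] += int(target == response)  # correct if the response matches the target
    return {group: hits[group] / totals[group] for group in totals}


trials = [
    ("native", "beeb", "beeb"),
    ("native", "bib", "bib"),
    ("non-native", "beeb", "bib"),
    ("non-native", "bib", "bib"),
]
print(accuracy_by_group(trials))
```

For these four made-up trials the output is `{'native': 1.0, 'non-native': 0.5}`.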

Impact: This is an influential study on the impact of timing and formant transition information on vowel perception in natural speech. Participating students will be exposed to the rich literature on speech perception and comprehension, and will receive rigorous training in the acoustic manipulation of speech sounds and in psycholinguistic experimentation.

Skills Required

Programming skills and basic knowledge of phonetics and phonology. Competency in Praat (the acoustic analysis/manipulation software) and R (the statistical software) is preferred but not required.

UR Expo Poster (PDF)