See & Grasp Data Set

This website contains the See & Grasp data set introduced by Yildirim & Jacobs (2013). The data set contains both visual and haptic features for a subset of "Fribbles".

Fribbles are complex 3-D objects with multiple parts and spatial relations among the parts. Moreover, Fribbles have a categorical structure: each Fribble is an exemplar from a category formed by perturbing a category prototype. The unmodified 3-D object files for the whole set of Fribbles can be found on the web pages of Mike Tarr (Department of Psychology, Carnegie Mellon University). We slightly modified these object files so that the connections among parts would be stronger. An innovative aspect of our research is that we have obtained physical copies of Fribbles fabricated using an extremely high-resolution 3-D printing process. The See & Grasp data set is based upon these visual and physical copies of Fribbles. We are sharing our data set in the hope that it will become a major resource for the cognitive science and computer science communities interested in perception.

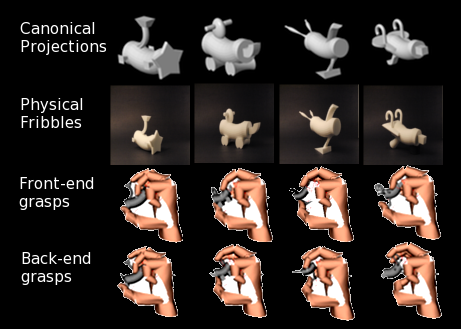

The See & Grasp data set contains 40 items corresponding to 40 Fribbles (10 exemplars in each of the 4 categories). There are 3 entries associated with each item. The first entry is the 3-D object model for a Fribble. The second entry is an image of the Fribble rendered from a canonical viewpoint so that the Fribble's parts and the spatial relations among the parts are clearly visible. (Using the 3-D object model, users can easily generate new images of a Fribble from any desired viewpoint.) The third entry is a representation of the Fribble's haptic features: a set of joint angles obtained from a grasp simulator known as "GraspIt!" (Miller & Allen, 2004).

GraspIt! contains a simulator of a human hand. When forming the representation of a Fribble's haptic features, the input to GraspIt! was the 3-D object model for the Fribble. Its output was a set of 16 joint angles of the fingers of a simulated human hand, obtained when the simulated hand "grasped" the Fribble. Grasps (closings of the fingers around a Fribble) were performed using GraspIt!'s AutoGrasp function. Each Fribble was grasped twice, once from its front and once from its rear, so the haptic representation of a Fribble is a 32-dimensional vector (2 grasps × 16 joint angles per grasp). To be sure that Fribbles fit inside GraspIt!'s hand, their sizes were reduced by 67%. The figure above illustrates all three kinds of entries for one exemplar from each of the four categories.
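To make the arithmetic of the haptic representation concrete, the following is a minimal Python sketch of how two 16-angle grasps combine into one 32-dimensional vector. The function name `haptic_vector` is hypothetical, and placing the front grasp before the rear grasp is an assumption made for illustration; the description above does not state the ordering used in the data set.

```python
from typing import List, Sequence

JOINTS_PER_GRASP = 16  # joint angles returned per grasp, as described above
GRASPS_PER_ITEM = 2    # one grasp from the front, one from the rear


def haptic_vector(front: Sequence[float], rear: Sequence[float]) -> List[float]:
    """Concatenate two grasps into a single 32-dimensional feature vector.

    ASSUMPTION: front-then-rear ordering is illustrative only; the data set
    description does not specify which grasp comes first.
    """
    for name, grasp in (("front", front), ("rear", rear)):
        if len(grasp) != JOINTS_PER_GRASP:
            raise ValueError(
                f"{name} grasp has {len(grasp)} angles, expected {JOINTS_PER_GRASP}"
            )
    return list(front) + list(rear)


# Placeholder angles, not real data from the data set.
example = haptic_vector([0.0] * 16, [1.0] * 16)
assert len(example) == JOINTS_PER_GRASP * GRASPS_PER_ITEM
```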

The downloadable file contains two folders and a text file. The folder "3Dmodels" contains the 3-D computer models in three different formats: the folder "3Dmodels/STL" contains the STL files, the folder "3Dmodels/VRML" contains the WRL files, and the folder "3Dmodels/OBJ" contains the OBJ files for the 40 Fribbles. Filenames indicate the identity of the Fribble (e.g., the files "1.WRL", "1.STL", and "1.OBJ" contain the 3-D mesh for object 1, and so on). The folder "2Dimages" contains the 2-D canonical projection for each of the Fribbles (again, the file "1.jpeg" is the canonical projection for object 1). Finally, the file "Haptic.csv" contains the haptic features for each of the Fribbles. It is a CSV file with 40 rows and 32 numbers in each row. Within a row, each column is one joint angle (out of 16 joint angles) from one of the two grasps.
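As a rough illustration of the layout described above, the following Python sketch reads "Haptic.csv", checks that it has 40 rows of 32 numbers, and builds the expected file paths for one item. The helper names (`parse_haptic`, `load_haptic`, `item_paths`) are hypothetical. The sketch assumes the CSV is comma-separated with no header row; any correspondence between row order and object number would also be an assumption, since the description does not state it.

```python
import csv
from pathlib import Path
from typing import Dict, Iterable, List

N_ITEMS = 40      # rows in Haptic.csv, per the description
N_FEATURES = 32   # 2 grasps x 16 joint angles


def parse_haptic(lines: Iterable[str]) -> List[List[float]]:
    """Parse CSV lines into a 40 x 32 list of floats, validating the shape.

    ASSUMPTION: comma-separated values, no header row.
    """
    rows = [[float(v) for v in row] for row in csv.reader(lines) if row]
    if len(rows) != N_ITEMS:
        raise ValueError(f"expected {N_ITEMS} rows, found {len(rows)}")
    for i, row in enumerate(rows, start=1):
        if len(row) != N_FEATURES:
            raise ValueError(f"row {i} has {len(row)} values, expected {N_FEATURES}")
    return rows


def load_haptic(path: str) -> List[List[float]]:
    """Read and validate the haptic features from a file on disk."""
    with open(path, newline="") as f:
        return parse_haptic(f)


def item_paths(root: str, item_id: int) -> Dict[str, Path]:
    """Build the file paths for one Fribble, following the naming described above."""
    base = Path(root)
    return {
        "stl": base / "3Dmodels" / "STL" / f"{item_id}.STL",
        "wrl": base / "3Dmodels" / "VRML" / f"{item_id}.WRL",
        "obj": base / "3Dmodels" / "OBJ" / f"{item_id}.OBJ",
        "image": base / "2Dimages" / f"{item_id}.jpeg",
    }
```

A typical call would be `features = load_haptic("Haptic.csv")`, run from the directory where the download was unpacked.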

Please cite the following paper when using the See & Grasp data set:

Yildirim, I. & Jacobs, R. A. (2013). Transfer of object category knowledge across visual and haptic modalities: Experimental and computational studies. Cognition, 126, 135-148.

Citation for GraspIt!:

Miller, A., & Allen, P. K. (2004). Graspit!: A versatile simulator for robotic grasping. IEEE Robotics and Automation Magazine, 11, 110-122.